FastBTM: Reducing the Sampling Time for Biterm Topic Model

Abstract

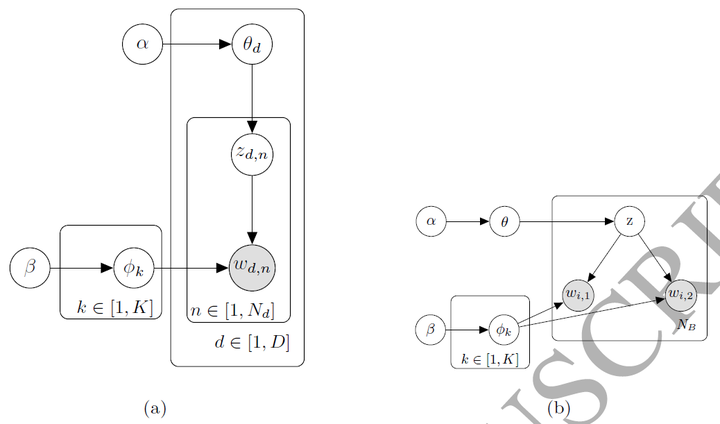

Owing to the popularity of social networks such as microblogs and Twitter, a vast amount of short text data is created every day. Research on short text, such as topic inference for short text, has therefore become increasingly important. The biterm topic model (BTM) exploits the word co-occurrence patterns of the corpus, which lets it outperform conventional topic models at uncovering latent semantic relevance in short text. However, BTM relies on Gibbs sampling to infer topics, which is very time consuming, especially for large-scale datasets or when the number of topics is extremely large: each sample requires O(K) operations, where K denotes the number of topics in the corpus. In this paper, we propose FastBTM, an acceleration algorithm for BTM that uses an efficient sampling method and converges much faster than BTM without degrading topic quality. FastBTM is based on Metropolis-Hastings and the alias method, both of which have been widely adopted for the Latent Dirichlet Allocation (LDA) model and have achieved outstanding speedups. FastBTM effectively reduces the sampling complexity of the biterm topic model from O(K) to O(1) amortized time. We carry out a number of experiments on three datasets: two short text datasets, the Tweets2011 Collection and Yahoo! Answers, and one long document dataset, Enron. Our experimental results show that the gap in running time between FastBTM and BTM widens as the number of topics K increases. In addition, FastBTM is effective on both short text datasets and long document datasets.
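To make the O(K)-to-O(1) claim concrete, the following is a minimal Python sketch of the two generic building blocks named above, not the FastBTM implementation itself. It assumes a plain list of unnormalized topic weights; the function names `build_alias_table`, `alias_draw`, and `mh_step`, and the `target_weight` callback, are hypothetical and chosen for illustration. The alias table costs O(K) to build but then yields each draw in O(1), and a Metropolis-Hastings acceptance test corrects for the table being a (possibly stale) proposal rather than the exact conditional.

```python
import random


def build_alias_table(weights):
    """Vose's alias method: O(K) construction from unnormalized weights."""
    k = len(weights)
    total = sum(weights)
    scaled = [w * k / total for w in weights]  # mean of scaled weights is 1
    prob = [0.0] * k
    alias = [0] * k
    small = [i for i, p in enumerate(scaled) if p < 1.0]
    large = [i for i, p in enumerate(scaled) if p >= 1.0]
    while small and large:
        s = small.pop()
        l = large.pop()
        prob[s] = scaled[s]          # keep s with this probability
        alias[s] = l                 # otherwise fall through to l
        scaled[l] = scaled[l] + scaled[s] - 1.0
        (small if scaled[l] < 1.0 else large).append(l)
    for i in large + small:          # leftovers are 1 up to rounding error
        prob[i] = 1.0
        alias[i] = i
    return prob, alias


def alias_draw(prob, alias, rng=random):
    """Draw one topic index in O(1): pick a bucket, then flip a biased coin."""
    i = rng.randrange(len(prob))
    return i if rng.random() < prob[i] else alias[i]


def mh_step(current, target_weight, proposal_weights, prob, alias, rng=random):
    """One Metropolis-Hastings step using the alias table as the proposal.

    target_weight(t) is an assumed callback returning the unnormalized
    true conditional weight of topic t; proposal_weights are the weights
    the alias table was built from.
    """
    candidate = alias_draw(prob, alias, rng)
    num = target_weight(candidate) * proposal_weights[current]
    den = target_weight(current) * proposal_weights[candidate]
    accept = 1.0 if den == 0 else min(1.0, num / den)
    return candidate if rng.random() < accept else current
```

In a sampler built this way, the table would be rebuilt only occasionally and reused for many draws, which is where the amortized O(1) cost per sample comes from; how often FastBTM rebuilds its tables and how it factors the BTM conditional are details of the paper body, not of this sketch.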