Abstract

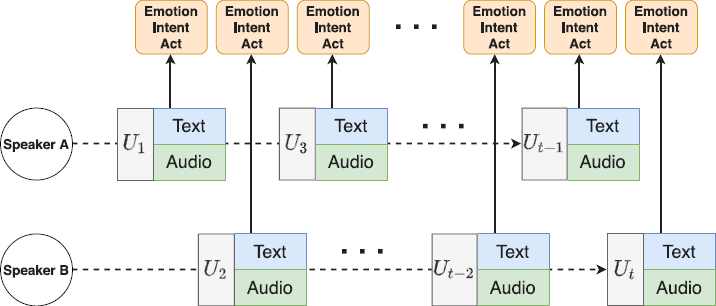

Conversation understanding is a necessary step for many applications, including social media, education, and argumentation mining, and has been gaining increasing attention from the research community. Reasoning over long-range contextual dependencies is the key to utterance-level conversation understanding. To emphasize the importance of contextual reasoning, we propose an end-to-end graph attention reasoning network that takes both word-level and utterance-level context into account. Specifically, a word-level encoder with a novel convolution gate is first built to filter out irrelevant contextual information. Based on the representations extracted by the word-level encoder, a graph reasoning network is designed to exploit utterance-level context: the entire conversation is treated as a fully connected graph, with utterances as nodes and attention scores between utterances as edges. The proposed model is a general framework for conversation understanding tasks and can be flexibly applied to most conversation datasets without changing the network architecture. Furthermore, we revise the tensor fusion network by adding a residual connection to explore cross-modal conversation understanding. In the uni-modal setting (text only), experiments show that the proposed method surpasses current state-of-the-art methods on emotion recognition, intent classification, and dialogue act identification tasks. In the cross-modal setting (text and audio), experiments on the IEMOCAP and MELD datasets show that the proposed method can use cross-modal information to improve model performance.
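As a rough illustration of the utterance-level graph described in the abstract, the following minimal sketch treats each utterance representation as a node and uses row-normalized attention scores as edge weights of a fully connected graph. It is not the proposed model: the scaled dot-product scoring, the function name `utterance_graph_attention`, and the random inputs are assumptions made only for this example, and the word-level encoder and convolution gate are omitted.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def utterance_graph_attention(H):
    """One attention step over a fully connected utterance graph.

    H: array of shape (n_utterances, d), one row per utterance (node).
    Returns the edge-weight matrix A and the context-aware node features.
    """
    d = H.shape[1]
    # Assumed scoring function: scaled dot product between every pair of nodes.
    scores = H @ H.T / np.sqrt(d)
    # Edge weights: each row is a distribution over all utterances.
    A = softmax(scores, axis=1)
    # Each node aggregates features from every utterance in the conversation.
    return A, A @ H


# Hypothetical input: a conversation of 4 utterances with 8-dim representations.
rng = np.random.default_rng(0)
H = rng.normal(size=(4, 8))
A, H_ctx = utterance_graph_attention(H)
```

Here `A[i, j]` plays the role of the edge from utterance `i` to utterance `j`, and `H_ctx` holds the utterance representations after one round of context aggregation.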