A joint model of extended LDA and IBTM over streaming Chinese short texts

Abstract

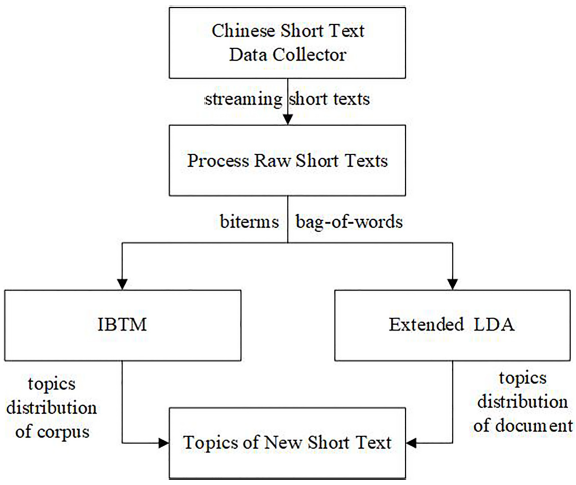

With the prevalence of short texts, discovering the topics within them has become an important task. The Biterm Topic Model (BTM) is better suited to discovering topics in short texts than traditional topic models. However, some challenges remain: BTM ignores document-topic semantic information and therefore fails to capture the true intentions of users. In addition, it is a static method and cannot handle streaming short texts, where each new text must be processed as soon as it arrives. To preserve document-topic information and obtain the topic distribution of a new short text immediately, we propose a joint model based on online algorithms for Latent Dirichlet Allocation (LDA) and BTM, which combines the merits of both models. The model alleviates sparsity in short texts through the online algorithm of BTM, namely the Incremental Biterm Topic Model (IBTM), and preserves document-topic information through extended LDA. Considering the differences between written English and Chinese, we also use combined words in short texts as keywords to extend the length of the texts and preserve the true intentions of users. Experimental results on two real-world datasets show that our method outperforms the baseline methods. Finally, we describe an application of our method to the task of discovering user interest tags.
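To make the sparsity argument concrete, the following minimal sketch shows how a single short text can be expanded into biterms (unordered word pairs), the unit that BTM-style models work with. The function name `extract_biterms` and the sample tokens are illustrative assumptions, not taken from the paper, and the sketch covers only biterm extraction, not the joint model itself.

```python
from itertools import combinations


def extract_biterms(tokens):
    """Return every unordered word pair (biterm) in one short text.

    Hypothetical helper for illustration: each pair is sorted so that
    (a, b) and (b, a) are treated as the same biterm.
    """
    return [tuple(sorted(pair)) for pair in combinations(tokens, 2)]


# A tokenized short text (assumed to be already word-segmented).
tokens = ["apple", "phone", "price"]
biterms = extract_biterms(tokens)
print(biterms)
```

A text with n tokens yields n(n-1)/2 biterms, so even a very short text contributes several co-occurrence observations instead of a handful of isolated word counts.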